The workflow enabled a senior analyst to rapidly triage and prioritize alerts, moving top priority alerts to the top of the list and notifying other analysts.

Rapid triage workflow

Overview

Impact

54% decrease in analyst cycle time on customer deliverables (24 min to 11 min)

The problem

During the day, the SOC typically has between 150 and 200 alerts in the queue. The problem for a SOC analyst is, “which alert, out of more than 100, should I triage first?”

Biggest research insight

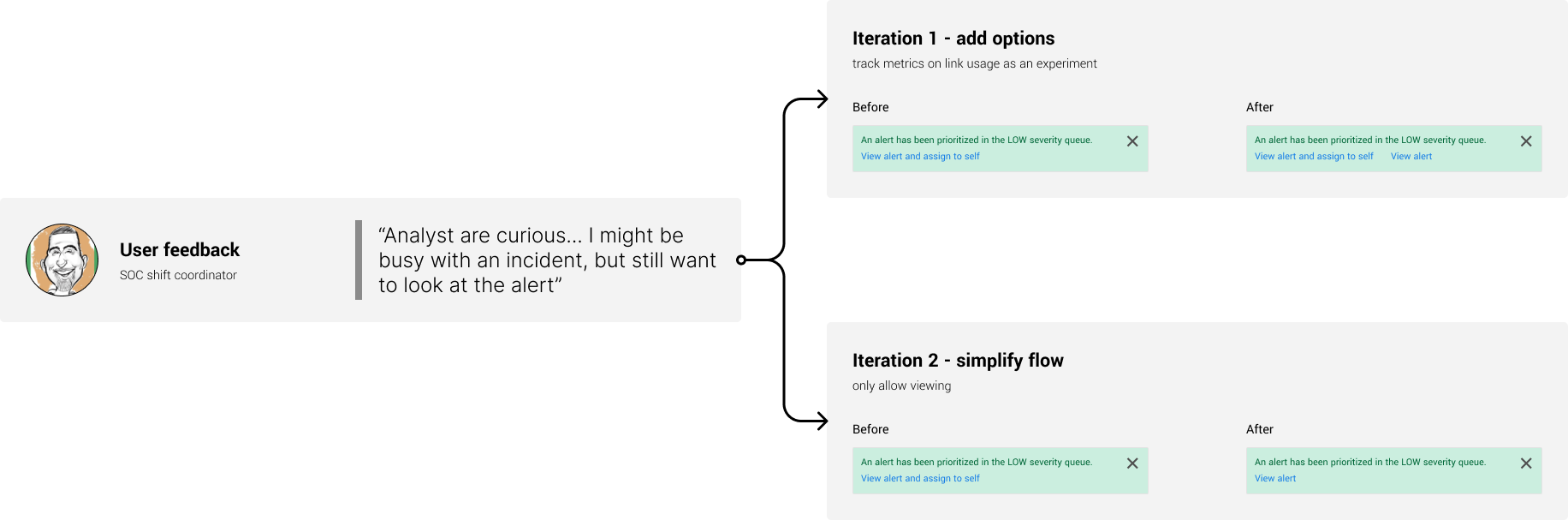

Analyst curiosity about top-priority alerts could have derailed the happy-path flow.

My role

Lead UX designer

Contextual inquiry

Sketching

Prototyping

Lean UX

The users

Expel SOC analysts

Persona quote - “I always ask myself, how could this bite us in the ass if we’re wrong?”

Internal user who spends 10 hours per day in Workbench

Customer analysts

Persona quote - “I want to get Expel more context so they make the right decision”

External user who monitors numerous security products beyond Workbench

Terminology

| Term | Definition |
|---|---|
| Triage | Process of determining priority for security alerts |
| Workbench | Expel's platform that ingests customer security alerts and where Expel SOC analysts triage them |
| SOC | Security operations center |
| Cycle time | Time from when an alert appears in Workbench to when it is closed |
| Investigations/incidents | Deliverables for customers when they might have had a security breach |

Timeframe

5 weeks - UX work

4 months - UX & Eng/QA work

Contributors

Nabeel Zafar - Lead Eng

Anthony Via - PM

Fred Mottey - QA

Jack Howard - Eng

Ross Tew - Eng

Final concept

Prioritizing an alert

Top priority alerts automatically move to the top of the list and send a toast notification to other users.

Customer analyst view

Customers, who have far fewer alerts in their queue, often wonder why it takes hours to triage their alerts. When an analyst de-prioritizes an alert, a banner is automatically added explaining why.

De-prioritizing an alert

Bottom-priority alerts automatically move to the bottom of the list. Analysts then have several hours to fully triage these alerts.

The process

Initial sketch concept

After a stalled design sprint, I put together a loose sketch that took a very different approach from the existing alerts page. I brought it up a couple of times in presentations, but it didn’t generate much interest from stakeholders.

Existing alerts page

Future vision sketch proposal

Initial ask & contextual inquiry

The initial ask came in as a feature request with a specific implementation already in mind. Through contextual inquiry, I uncovered the underlying design question: “how might we prioritize a list of hundreds of items?”

Internal hackathon proposal

During a quarterly two-day internal hackathon, I decided to bring a lean version of the “super list” to life. I presented the problem, the contextual inquiry I had conducted, and a demo of what rapid triage might look like.

Scoping conversation with Eng

I often gave engineering context on what we expected from users and stayed flexible when scoping features. I held these conversations in public channels so the PM could also weigh in if needed.

Initial plan for rapid triage workflow

I took inspiration from the ride-share experience for drivers.

SOC feedback during development

In addition to conducting contextual inquiry with SOC analysts, I also consulted with shift coordinators, who had a different perspective than the “boots on the ground” operators.

SOC analyst feedback

“I really feel like you ‘get’ it which makes me super happy... I LOVE the ‘top priority’ queue feature”

“You really heard the needs of the SOC and problem solved and MADE IT A REALITY!!”

My reflection

What did I learn?

A succinct presentation showing the problem, summarizing the research I did, and demoing a solution builds buy-in and momentum.

What was I wrong about?

Although over 99% of all alerts are benign and should therefore be de-prioritized, I underestimated how concerned analysts would be about quickly making a public decision to label an alert as benign.

What would I do differently?

For the internal hackathon, I would have mocked up the full “super list” in the UI to inspire even more forward thinking about the alerts page.